Why AI Commerce Isn't Going Anywhere

Human Not Present payments are technically possible, but socially unacceptable

The more I work with AI tools, the more implausible it looks to me that AI commerce will go anywhere.

I’m not saying that AI is useless for all intents and purposes. On that, I can’t say for sure. What I can say is that an LLM tasked with buying stuff for us online, though technically possible, won’t live up to our expectations, and will eventually be abandoned.

In other words, it will follow the trail blazed by RPA.

When I was starting my career, Robotic Process Automation was all the rage.

The “Software eating the world” ethos was slowly catching on in the enterprise world, and there were, yes there were already, I swear, people very concerned about the potential for massive unemployment. It was around the time when David Graeber published Bullshit Jobs, and everyone was worried that they had one of those.

Which is one of the reasons I haven’t made up my mind about AI: I’ve witnessed this story before.

RPA is just software able to click on buttons and type into input forms. It was to enterprise software what macros are to spreadsheets. While this was happening, a wave of consulting firms predicted millions of people would be laid off, while at the same time aggressively offering their services for implementing RPA to “streamline”, “optimize” and “create value”.

The result? RPA is dead now.

Perhaps not in spirit; after all, if you were an RPA expert, you have already recycled yourself into an AI expert, or an Automation expert (whatever that means). But software that presses buttons and fills out forms has proven to be useful only for QA.

We were going to get massive unemployment. Instead, we got end-to-end testing.

The story is playing itself out once again, this time with AI commerce. Tools like ChatGPT, GitHub Copilot and Claude Code have captured the imagination of millions of people, and my LinkedIn feed is filled with a stream of futurists predicting that AI is also coming for the payments industry. “In only a few years”, they say, “people will ask AI to buy things for them”.

But here’s something that these futurists haven’t really thought through. I’m not arguing about whether AI can buy things online (it can). But can AI make ecommerce any different than it is now?

A Newsletter For The Engineers That Keep Money Moving

Designing payment systems for interviews is easy. Designing them for millions of transactions is not.

On YouTube, you’ll find tons of tutorials that show you how to design payment systems “to ace your interview”. Good, but you already have the job. Are these designs sound? Are they scalable?

You’re not looking for what works in theory. You’re looking for what works.

After nearly a decade building and maintaining large-scale money software, I’ve seen what works (and what doesn’t) about software that moves money around. In The Payments Engineer Playbook, I share one in-depth article every Wednesday with breakdowns of how money actually moves: topics range from what databases to use, to payment orchestration, even fraud and ledgers.

And I show you how top fintech companies build their systems.

If you’re an engineer or founder who needs to understand money software in depth, join close to 2,000 subscribers from companies like Shopify, Modern Treasury, Coinbase or Flywire to learn how real payment engineers plan, scale, and build money software.

Sudo buy me a sandwich

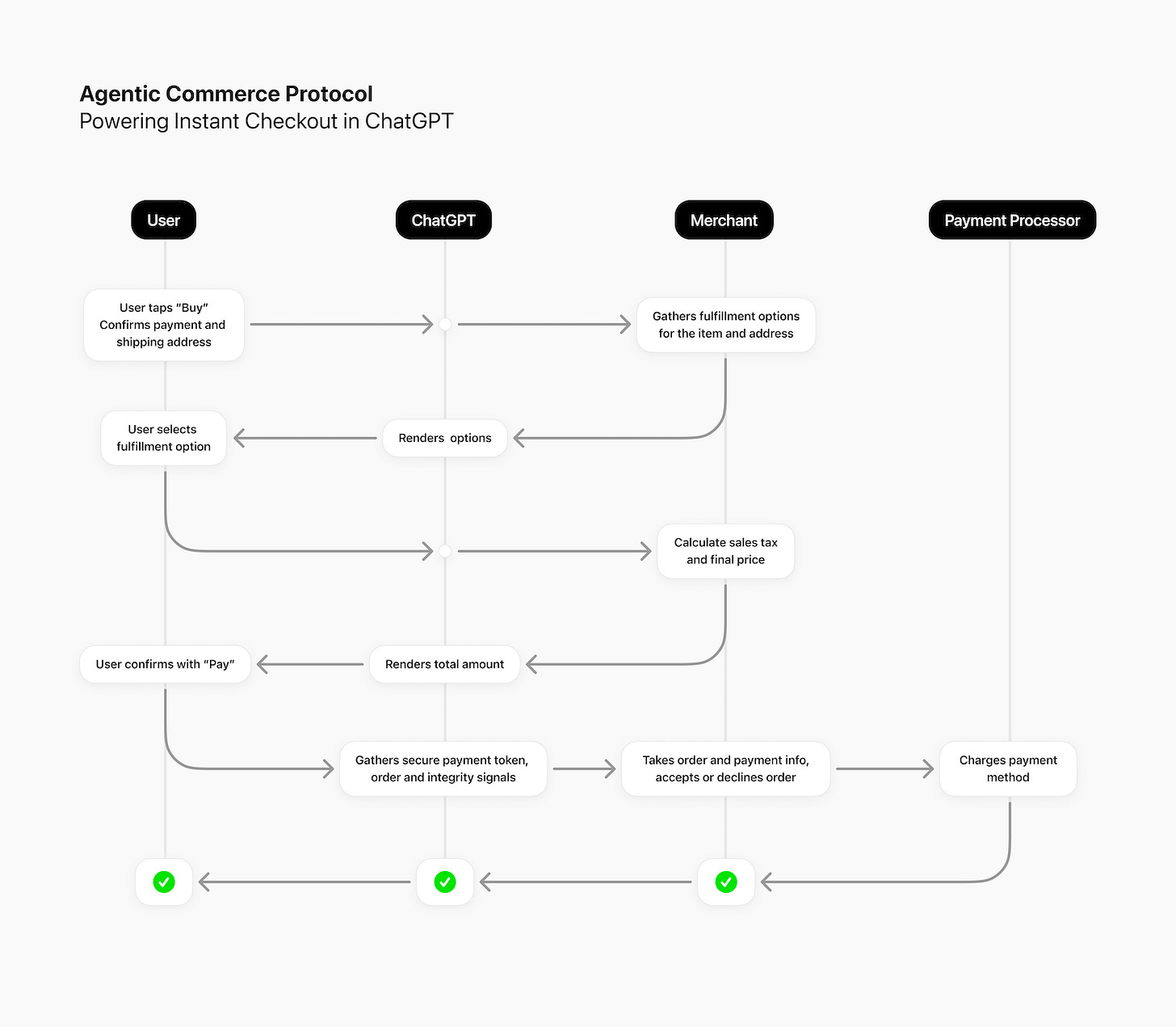

AI commerce is conceptually simple: you ask your favorite AI tool to buy stuff for you, just like you’ve been asking it for everything else since you started using it.

Humans, when they buy online, are required to prove that they are who they claim to be (Authentication), and have the resources to buy what they requested (Authorization).

We’ve implemented a few mechanisms to delegate authentication and authorization, but they work only because they’re recurring payments. One-time purchases must still be done with a human present.

But AI commerce can’t go anywhere if agents claim to be human. We’re already having enough trouble keeping bots at bay; the last thing we want is to create backdoors for some of them to go through.

If agents can’t claim to be you and authenticate, what the AI commerce protocols have established instead is the idea of Authenticity. An agent is authentic if it meets two conditions:

Have permission from the user

Have requested what the user intended

We can naively give an agent the permission they need. But there’s an intrinsic gap between following instructions, which is what classic computing was all about, and inferring instructions, which is what AI actually does. This gap is what makes ensuring that the AI requested what the user intended so difficult at scale.
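To make that gap concrete, here is a minimal sketch in Python. The `Mandate` and `PurchaseRequest` types, their fields, and the example values are hypothetical, not taken from any real AI commerce protocol. The permission half is plain rule-following; nothing in the code can verify that the request matches what the user meant.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class Mandate:
    """Hypothetical permission a user grants to an agent."""
    user_id: str
    max_amount_cents: int
    allowed_merchants: frozenset[str]
    expires_at: datetime


@dataclass(frozen=True)
class PurchaseRequest:
    """Hypothetical purchase an agent wants to make."""
    user_id: str
    merchant: str
    amount_cents: int
    item: str


def has_permission(mandate: Mandate, request: PurchaseRequest, now: datetime) -> bool:
    """The easy half of authenticity: deterministic rule checks."""
    return (
        request.user_id == mandate.user_id
        and request.merchant in mandate.allowed_merchants
        and request.amount_cents <= mandate.max_amount_cents
        and now < mandate.expires_at
    )


mandate = Mandate(
    user_id="u1",
    max_amount_cents=2_000,
    allowed_merchants=frozenset({"deli"}),
    expires_at=datetime(2030, 1, 1, tzinfo=timezone.utc),
)
now = datetime(2025, 1, 1, tzinfo=timezone.utc)

# The user said "buy me a sandwich". Both requests pass the permission check,
# but only the user knows which one (if either) they actually intended.
tuna = PurchaseRequest("u1", "deli", 900, "tuna sandwich")
platter = PurchaseRequest("u1", "deli", 1_900, "sandwich platter")
print(has_permission(mandate, tuna, now), has_permission(mandate, platter, now))
```

Both requests are permitted, and the code has no way to tell them apart. That second condition, intent, is the part no rule check can cover.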

Ambiguous human language can’t be narrowed down to a specific instruction unless there’s a specific “Pay” button. And that defeats the purpose of agents.

Ignore all previous instructions

Here’s a good alternative: let’s allow AI commerce to be reversible.

Instead of trying to make almost-but-not-quite-exact instructions more precise, and waiting for those “in a few years AI will be so good that” predictions to come true, let’s lean into AI hallucinations.

Let us invent Human Not Present payments.

If models are getting better over time at this, then a good chunk of AI commerce will not need to be reversed. And, for the rest, merchants should account for the possibility that the purchase was hallucinated, and they must be OK with giving the money back.
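As a rough sketch of what "reversible" could mean in practice, here is a hypothetical Human Not Present payment in Python. The `HnpPayment` type and the 14-day window are assumptions made for illustration; no such payment type exists in any card scheme today.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from enum import Enum


class Status(Enum):
    CAPTURED = "captured"
    REVERSED = "reversed"


# Assumption: a fixed window in which the user can disown an agent's purchase.
REVERSAL_WINDOW = timedelta(days=14)


@dataclass
class HnpPayment:
    """Hypothetical Human Not Present payment made by an agent."""
    amount_cents: int
    captured_at: datetime
    status: Status = Status.CAPTURED

    def reverse(self, now: datetime) -> bool:
        """Reverse the payment if it is captured and still inside the window.

        Returns True if the payment was reversed, False otherwise.
        """
        if self.status is not Status.CAPTURED:
            return False
        if now - self.captured_at > REVERSAL_WINDOW:
            return False
        self.status = Status.REVERSED
        return True


captured = datetime(2025, 1, 1, tzinfo=timezone.utc)
payment = HnpPayment(amount_cents=900, captured_at=captured)
reversed_ok = payment.reverse(captured + timedelta(days=3))
print(reversed_ok, payment.status)
```

The mechanics are trivial. The hard question, as the rest of this section argues, is who absorbs the cost each time `reverse` succeeds.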

AI commerce can’t go anywhere if agents aren’t accountable for hallucinations.

The technicalities of this are tricky, because I see no way around the AI being the merchant of record for an AI purchase. But MoRs have never been too flexible, for good reason:

MoRs take care of everything for you.

Processing payments, chargebacks, taxes, being PCI compliant, billing. Everything. MoRs are in business, and you are often seen as a supplier, rather than a client. They’re identified to the end customer as the seller, use their own name rather than your company’s, and provide recourse in the event of a dispute.

The obvious problem is that, being responsible for everything, they often have a thorough, painstakingly slow onboarding process. You’ll end up wondering if they’re actually interested in selling anything.

If the AI is the MoR, it would sell the product to you, and it would eat the cost of the refunded item if there was a hallucinated purchase.

But OpenAI is having none of it. The company clearly states that they’re “not the merchant of record”, and that “merchants [are expected to] handle payments just as they do for accepting any other digital payment”.

We managed to arrange Card Not Present payments because someone is willing to accept the liability for the added fraud risk.

Now, merchants are forced to offer this hallucination-refund option for HNP payments.

Will this extra option be free? Merchants already pay interchange to plug into the credit card system in exchange for a predictable dispute process. Will merchants pay for an excessively flexible refund policy too?

Just like with credit card interchange, merchants won’t accept the liability of HNP unless the AI commerce experience becomes so good that they would lose too much money without it.
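The merchant's side of that trade can be sketched as a back-of-the-envelope check. The function and every number below are illustrative assumptions, not data from any merchant or protocol.

```python
def hnp_worth_it(
    extra_revenue: float,
    margin: float,
    orders: int,
    reversal_rate: float,
    cost_per_reversal: float,
) -> bool:
    """Return True if the extra profit from AI-driven sales exceeds
    the expected cost of hallucination refunds."""
    extra_profit = extra_revenue * margin
    expected_reversal_cost = orders * reversal_rate * cost_per_reversal
    return extra_profit > expected_reversal_cost


# Illustrative numbers only: 500 agent orders bringing 10,000 in extra revenue
# at a 20% margin, with each reversal costing the merchant 30.
few_reversals = hnp_worth_it(10_000, 0.20, 500, 0.05, 30.0)
many_reversals = hnp_worth_it(10_000, 0.20, 500, 0.20, 30.0)
print(few_reversals, many_reversals)
```

Under these made-up numbers, the same channel flips from worthwhile to a loss as the reversal rate climbs, which is why the refund liability cannot simply be handed to merchants for free.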

Which brings me to the last point.

Much hype, no use

Is AI commerce technically possible? Of course it is: you can use it right now, just like Julie Ferguson did.

In a video she made last July, Julie Ferguson, the CEO of the Merchant Risk Council, records herself buying online using Perplexity.

It is a very interesting video to watch because, unlike asking ChatGPT questions, you wouldn’t be able to tell where the AI is in all this.

As far as the consumer experience, it was great, was able to do a quick search, click, click, click. One click checkout, I was using my Google wallet […] And I’ve got the product.

— Julie Ferguson

And that’s my point. AI commerce can’t go anywhere if the experience isn’t significantly better.

Julie Ferguson’s video is state-of-the-art AI commerce, and it looks exactly the same as buying things online with good search. The only significant difference is that she was buying products at Walmart, the MoR was Perplexity, and the item was delivered from Target.

No hallucinations. But it was very confusing.

Payments are human

In terms of payments experience, I believe that the standard is set by Airbnb.

If Airbnb hadn’t nailed what it takes to rent a room in a house from someone you don’t know, the company would’ve never gotten off the ground. Before Airbnb, doing what they do was solicitation: exchanging cash with a stranger in a bedroom.

Airbnb was responsible for an experience they couldn’t control, and was accountable for all the weird scenarios that ultimately drive most of their word-of-mouth marketing.

And that’s exactly what AI commerce is up against. Too bad the people at Airbnb explicitly call their payments “less transactional, and more human”.

We really focus on less transactional and more human. What does this mean? It means that if you’re paying on Airbnb, or if you’re being paid, we don’t want you to think about the transaction. We want you to think about what you’re doing offline.

— Controlling Our Own Destiny: Payments as a Service at Airbnb

I see none of that in AI commerce.

AI commerce can’t go anywhere if agents claim to be human, and the state of affairs is actively against machines pretending to be people.

AI commerce can’t go anywhere if agents aren’t accountable for hallucinations, but the added responsibility will incur a cost that no one seems willing to bear.

And AI commerce can’t go anywhere if the experience isn’t significantly better, but the use cases for AI buying stuff on our behalf are either a better search (which can be done with Google), or a delegated purchase (which can be implemented by the merchant without AI).

Even if we take a charitable view of AI in general, AI commerce is something that has been unjustifiably hyped by interested parties.

It will be tried a few times by everyone, until adoption plateaus and the operating costs kill all startups in the space.

AI commerce can’t go anywhere. And it won’t go anywhere.